We’re proud to present the results of our Adverse Events (AE) Large Language Model (LLM), called Sentinel.

At Stethy AI, our mission is simple but critical: every healthcare and life sciences workflow, clinical or administrative, must have patient safety at its core. Sentinel is purpose-built to detect and classify adverse events, compliance risks, and patient safety issues in unstructured text such as emails.

Accuracy is not enough. Healthcare demands trust, reliability, and validation by subject-matter experts. Sentinel is designed specifically for the regulatory, clinical, and compliance realities of healthcare and life sciences. This is more than a benchmark win; it is a proof point: healthcare automation cannot move forward responsibly without a dedicated safety layer for AE detection.

With this in mind, we benchmarked Sentinel against leading commercial large language models to test how well each one protects patient safety and supports compliance. Importantly, as healthcare and life sciences continue to automate, every workflow will need this type of safety layer for AE detection to ensure regulatory compliance and protect patients from harm.

To evaluate Sentinel, we compared its performance against commercial LLMs across three datasets:

A collection of ~2,888 anonymized emails provided by clients, with ground-truth labels curated by subject-matter experts. Sentinel was used to identify and triage adverse events within a high-volume mailbox to automate the pharmacovigilance process for a well-known large life sciences organization. This dataset is imbalanced toward the “No AE” class, reflecting real-world scenarios. Due to privacy concerns and non-disclosure agreements, this dataset has been kept internal and will be referred to as the “Internal Dataset.”

The Vaccine Adverse Event Reporting System (VAERS), co-managed by the U.S. CDC and FDA. This dataset contains ~2,000 reports of adverse events following vaccination. Since all records are true AEs, accuracy on this benchmark measures what share of adverse events each model captures. This dataset is publicly available.

A set of ~1,500 patient drug reviews sourced from Drugs.com, labeled as “AE” or “No AE” by subject-matter experts. This dataset is widely used in research for evaluating machine learning in pharmacovigilance and provides a real-world benchmark of AE detection performance.

Sentinel, the Stethy AE LLM, outperforms well-known LLMs in accuracy. While GPT-5 and Claude Opus 4 both scored about 81% accuracy, and Gemini 2.5 Pro lagged at 74%, our model achieved 87% accuracy, the highest of the four models compared. This difference highlights the value of a domain-specific model built with patient safety in mind, one that identifies side effects and compliance risks more reliably than broader models.

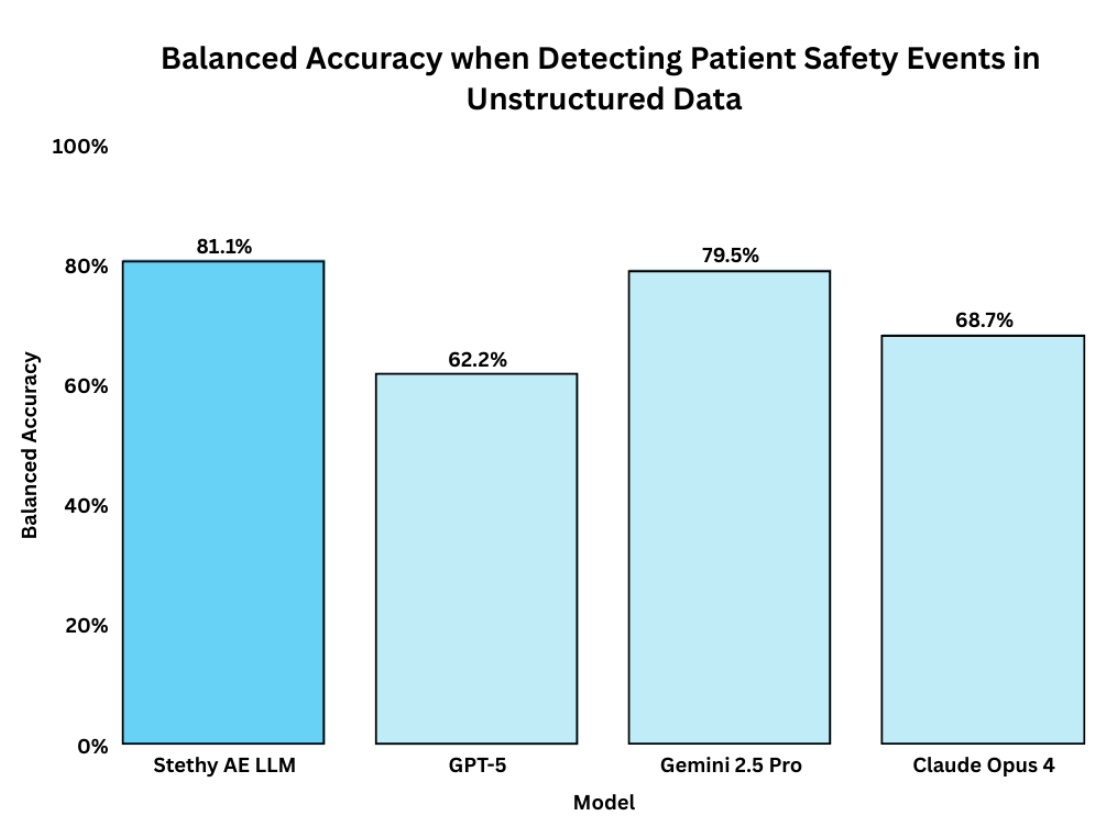

Accuracy alone is not enough. Balanced accuracy looks at performance across both sides: correctly catching true adverse events and correctly ignoring harmless cases. Again, Stethy AE LLM leads at 81.08%, ahead of Gemini 2.5 Pro (79.54%) and well ahead of Claude Opus 4 (68.67%) and GPT-5 (62.24%). This shows Sentinel isn’t just accurate overall; it handles both AE and No AE cases reliably.

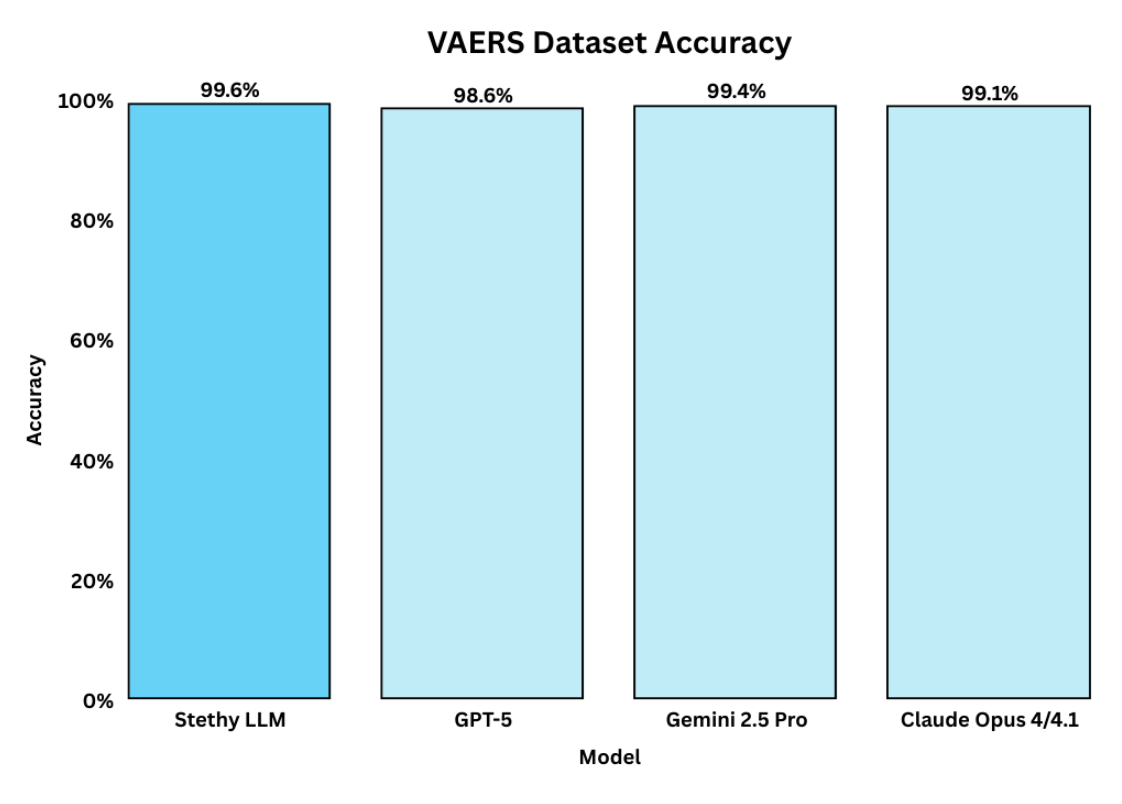
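For a two-class task such as AE versus No AE, balanced accuracy is the mean of sensitivity (the share of true AEs caught) and specificity (the share of No AE cases correctly left alone). The Python sketch below uses made-up confusion-matrix counts, not figures from this benchmark, to show how a dataset skewed toward No AE can yield high accuracy alongside much lower balanced accuracy. The function names are illustrative.

```python
def accuracy(tp, fn, tn, fp):
    """Share of all cases classified correctly."""
    return (tp + tn) / (tp + fn + tn + fp)


def balanced_accuracy(tp, fn, tn, fp):
    """Mean of sensitivity (AE recall) and specificity (No AE recall)."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


# Hypothetical imbalanced dataset: 100 AE cases and 900 No AE cases.
# The hypothetical model misses half of the AEs and raises no false alarms.
counts = dict(tp=50, fn=50, tn=900, fp=0)

print(f"accuracy:          {accuracy(**counts):.2f}")           # 0.95
print(f"balanced accuracy: {balanced_accuracy(**counts):.2f}")  # 0.75
```

In this illustration the model looks strong on accuracy because the majority class dominates, while balanced accuracy exposes the missed adverse events.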

On the VAERS dataset, all models perform at a high level, but Sentinel still distinguishes itself with 99.6% accuracy, placing it above GPT-5 (98.6%), Claude Opus 4/4.1 (99.1%), and Gemini 2.5 Pro (99.4%). Commercial models are strong on structured benchmark data, and Stethy delivers the same (or better) performance while being specifically designed to address the unique demands of healthcare and patient safety.
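Because every VAERS record is a true AE, accuracy on that dataset is the same quantity as recall (sensitivity): the share of adverse events a model flags. A minimal Python sketch with made-up predictions, not benchmark data, illustrates the equivalence. The function names and labels are assumptions chosen for the example.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive="AE"):
    """Fraction of truly positive cases that the model flagged as positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    return sum(p == positive for p in preds_for_positives) / len(preds_for_positives)


# Hypothetical all-positive dataset: 10 reports, every one a true AE.
y_true = ["AE"] * 10
# Hypothetical model output that misses one report.
y_pred = ["AE"] * 9 + ["No AE"]

print(accuracy(y_true, y_pred))  # 0.9
print(recall(y_true, y_pred))    # 0.9
```

A dataset with no negative cases cannot measure false alarms, which is why the mixed-label datasets are needed to assess specificity.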

On the Drugs.com dataset, Sentinel reached 97.5% accuracy, well above GPT-5 (70.9%), Claude Opus 4 (81.3%), and Gemini 2.5 Pro (72.2%). The same result held for balanced accuracy, where Sentinel again scored 97.5% while the other models scored lower. This demonstrates Sentinel’s strength in both detecting true adverse events and avoiding false alarms.

Together, these results confirm that Stethy AE LLM is not only more accurate but also more context-aware than general-purpose AI models. By combining validated AI with subject-matter expertise, we deliver technology that can be trusted to safeguard patients and strengthen operations across healthcare and the life sciences.

Looking ahead, as healthcare and life sciences continue to automate, every workflow will require this type of safety layer for AE detection to ensure regulatory compliance and protect patients from harm.

Sentinel can be deployed across the full spectrum of operations: in pharmacovigilance to triage patient safety reports and emails at scale, in regulatory affairs to validate submissions and safety narratives, in medical information to flag side effects hidden in inquiries, in clinical operations to detect protocol deviations and safety risks in site communications or EMR notes, and in quality and compliance teams to surface issues in product complaints, deviations, or audit records. Even hospital operations can benefit, with patient feedback, discharge summaries, and internal communications all safeguarded by automated oversight.

This is more than a benchmark win. It is a proof point: healthcare automation cannot move forward responsibly without a dedicated safety layer for AE detection. At Stethy, we believe that AI in healthcare must be both specialized and safe, and these findings demonstrate our leadership in advancing that standard.